Projects

Homogeneous and Heterogeneous 3D Face Recognition in Unconstrained Conditions

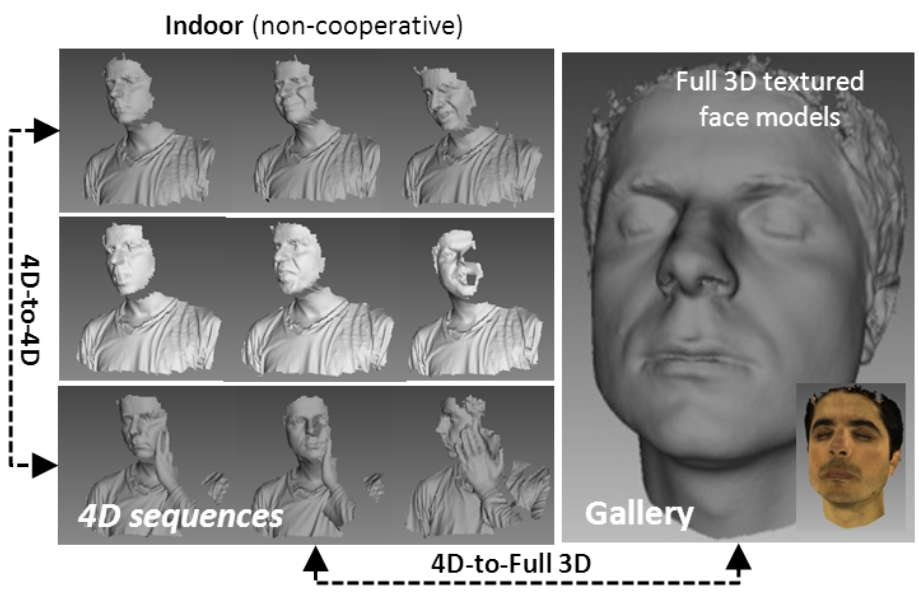

This project systematically studies homogeneous and heterogeneous 3D face recogni-tion in uncontrolled cases, e.g. freely moving faces when talking, walking, and con-tinuously changing head pose, including 4D face data preprocessing (de-noising, face detection, landmarking, pose estimation, reconstruction using low quality models, etc.), only shape based 4D face recognition, textured 4D face recognition, 3D-2D heteroge-neous face recognition, and 4D-3D heterogeneous face recognition. These contents cover all the critical problems of 3D face recognition in the real world, and extend the traditional static 3D face recognition to 4D homogeneous face recognition and 3D re-lated heterogeneous face recognition. This research comprehensively improves the theoretical framework of 3D face recognition which involves some issues in the 2D and 4D domains as well, and gives fundamental support to the application of 3D face recognition in the real condition.

Image Implicit Semantics Understanding in Social Media

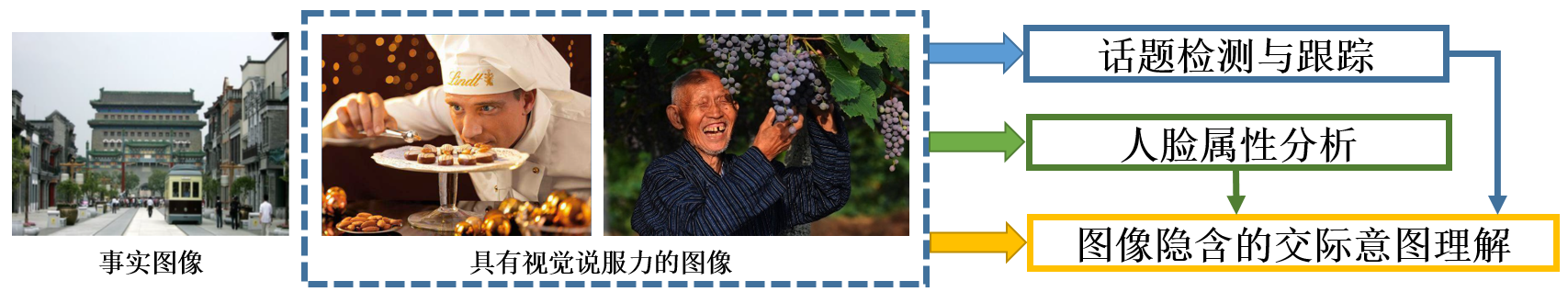

In social media, the goal of image implicit semantics understanding is to analyze the views, intents, etc. that users want to express in their posted images. It is extremely important for many real-world applications, such as public opinion monitoring, user abnormal behavior detection, etc. However, the automatic understanding of image implicit semantics is a research task just beginning to be explored in the literature. For understanding image implicit semantics in social media , topic detection and tracking, face attribute analysis, and communicative intent understanding are three key issues. So, this project will focus on the research of these issues. For topic detection and tracking, we analyze the multi-modal data jointly to model the semantic structures of topics and generate meaningful event descriptions. For image based facial attribute analysis, we exploit rich contexts in images for facial expression recognition and age estimation. For image communicative intent understanding, we automatically extract features based on deep learning methods, and analyze images containing various types of people and events.

Scene-aware Object Description and Recognition in Videos

This project targets on fine-grained pedestrian and vehicle description in surveillance videos, including object detection, tracking, and recognition techniques. In particular, it aims to address the challenges caused by large variations of objects and back-grounds. Considering that objects are of different scales with complex relationships, scene knowledge is highlighted and jointly modeled through specific deep learning networks as well as corresponding loss functions. This facilitates the theory frame-work of deep learning based video analysis and improves the performance of object attribute prediction.