Research

General Visual Model Training and Reasoning

Visual Generation

Object Detection

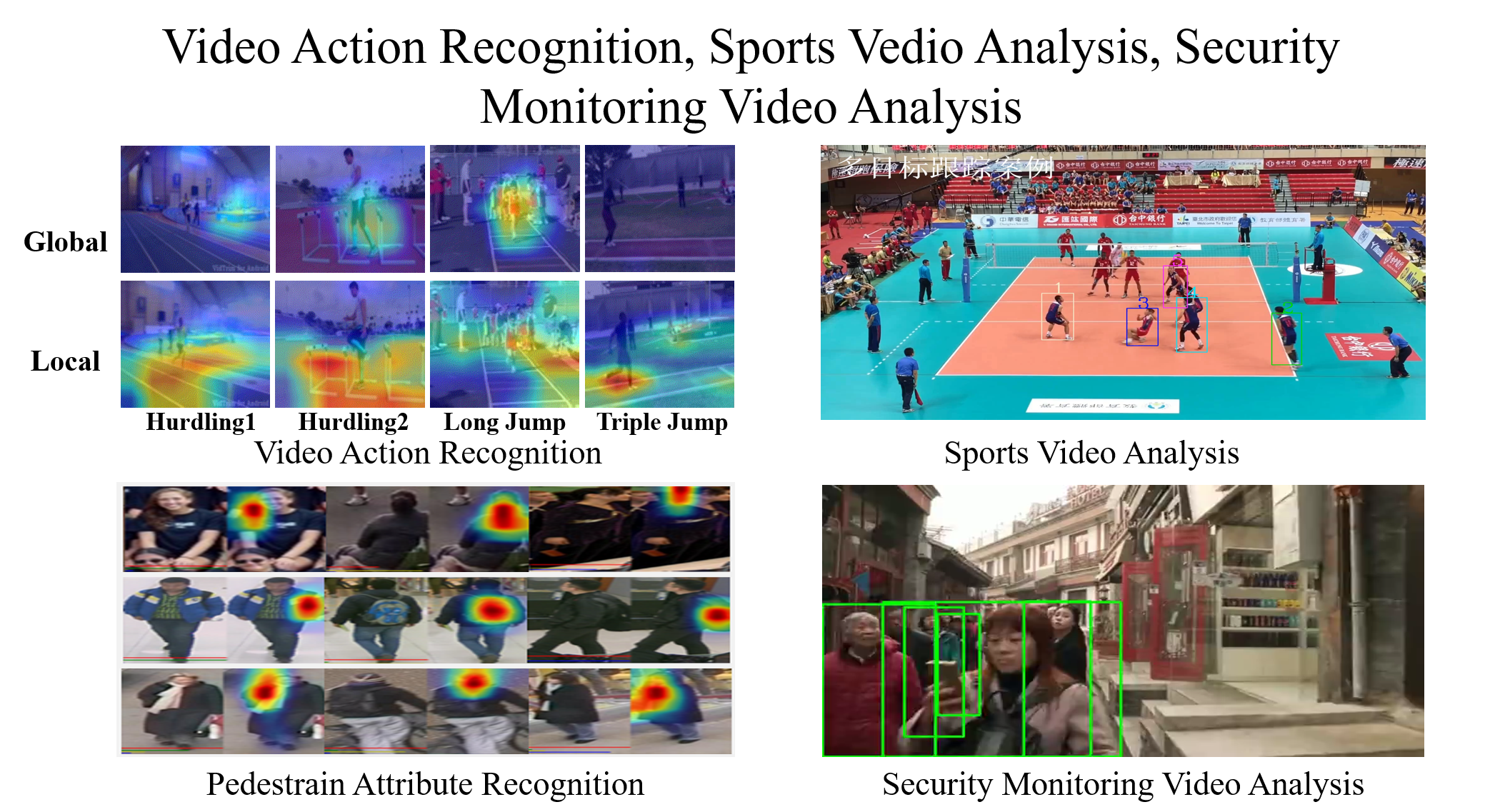

Intelligent Video Analysis

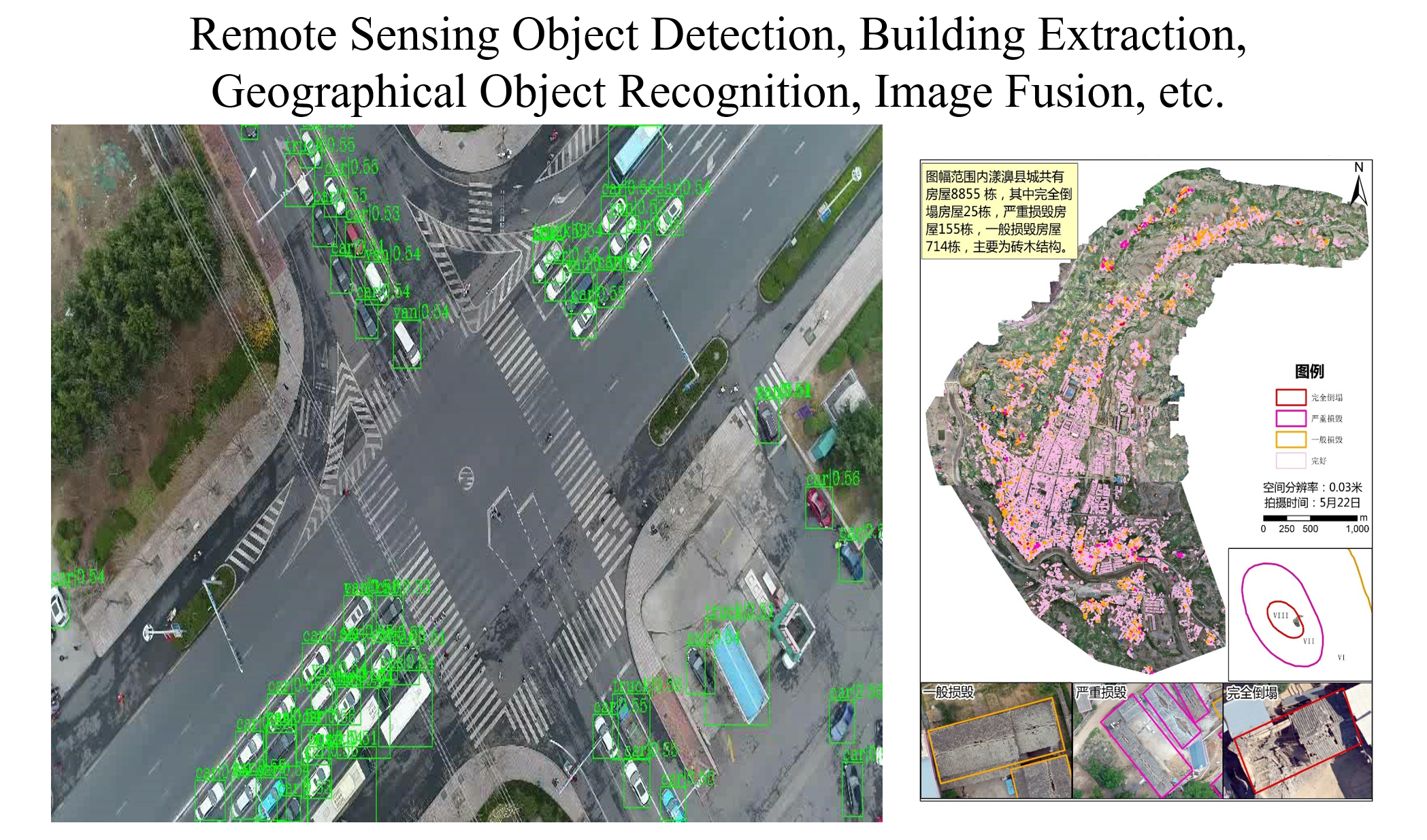

Remote Sensing Image Processing

Multimodal Document Understanding

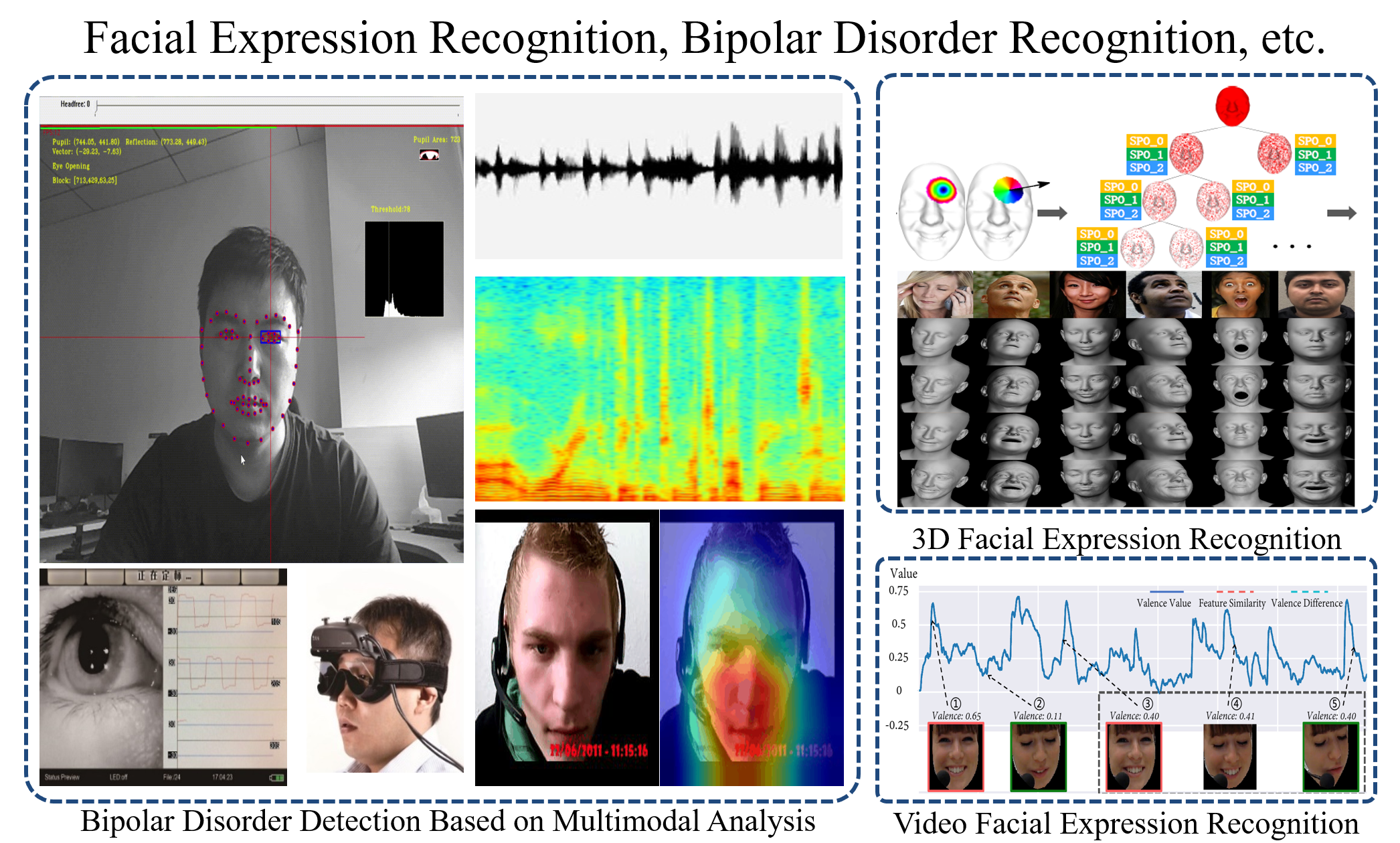

Affective Computing

Artificial Intelligence Security

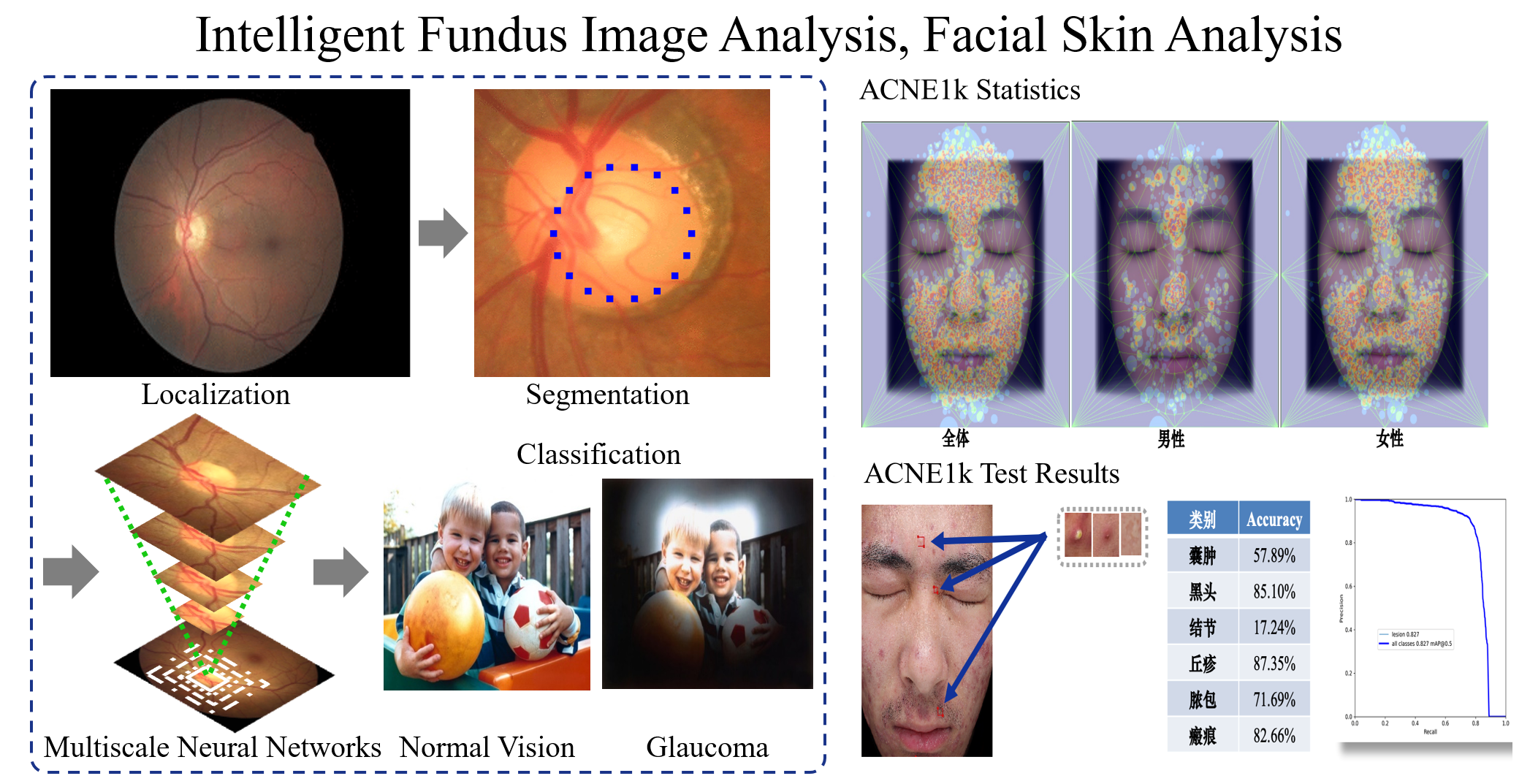

Medical Image Processing